Have AI LLMs Hit a Plateau?

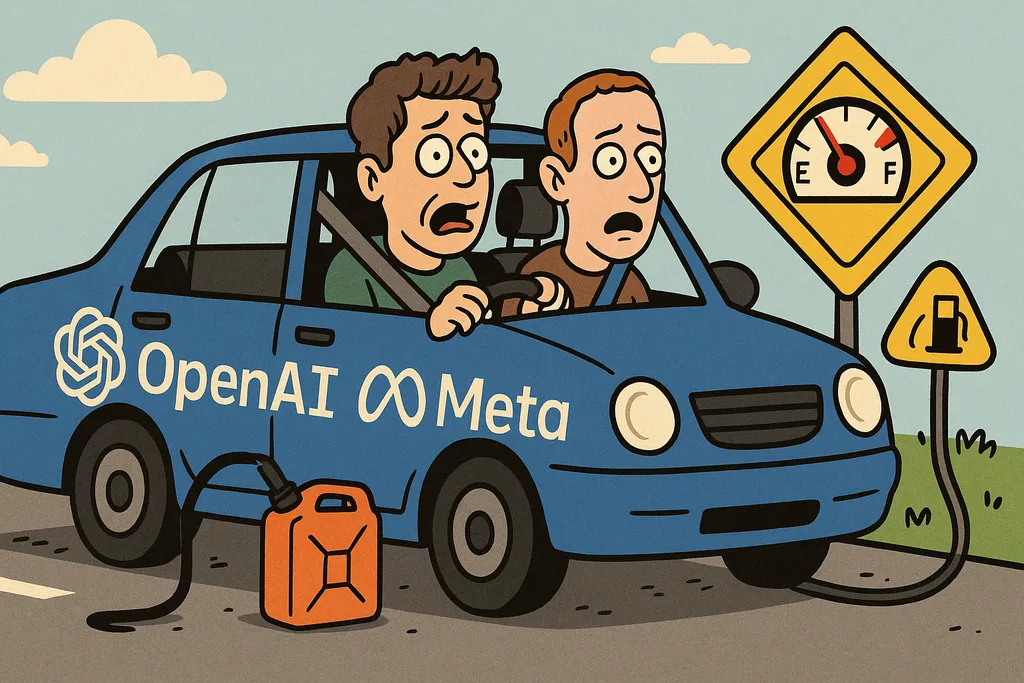

A growing chorus of experts and industry insiders suggests that progress in large language models (LLMs) may be slowing. The cause is not a lack of ambition but practical limits: diminishing returns from scale, rising energy costs, and a shrinking return on investment (ROI).

Recent releases of cutting-edge AI systems have shown only marginal improvements over their predecessors, reigniting fears that the industry is approaching a plateau. Constraints such as a limited supply of high-quality training data, soaring compute demands, and the shrinking payoff from brute-force scaling point toward a possible turning point for the field.

Industry leaders warn that the cost of AI development is increasingly tied to the cost of energy itself. Electricity and processing power are now the most significant bottlenecks. Some estimates suggest that sustaining continued AI growth could require the output of dozens of new power plants, straining grids already under pressure from climate commitments and rising demand.

Energy consumption is indeed climbing steeply. AI workloads already account for a significant share of global data center power use, and some projections suggest that share could double within a year. Building and operating AI-scale facilities is stretching utilities, raising concerns that ordinary consumers may ultimately pay higher electricity prices as a knock-on effect.

At the same time, researchers point to diminishing returns from so-called "scaling laws." The assumption that bigger datasets and more powerful GPUs automatically yield smarter models is showing cracks. Without major breakthroughs in efficiency, further gains in model performance could take decades or require computing resources on a planetary scale.
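Scaling laws are often summarized as a power law in which a model's loss falls as a small negative power of training compute. The sketch below assumes that form, with made-up constants `A` and `ALPHA` chosen purely for illustration (they are not values from any published fit), to show why returns diminish: each tenfold increase in compute cuts loss by the same fraction, so the absolute improvement gets smaller with every step even as the cost of each step grows tenfold.

```python
# Illustrative power-law scaling: loss = A * compute ** (-ALPHA).
# A and ALPHA are hypothetical constants for demonstration only.
A = 10.0
ALPHA = 0.05


def loss(compute: float) -> float:
    """Illustrative loss for a given compute budget (arbitrary units)."""
    return A * compute ** (-ALPHA)


def gains_per_10x(start: float, steps: int) -> list[float]:
    """Absolute loss reduction from each successive 10x increase in compute."""
    gains = []
    c = start
    for _ in range(steps):
        gains.append(loss(c) - loss(c * 10))
        c *= 10
    return gains


if __name__ == "__main__":
    for i, g in enumerate(gains_per_10x(1.0, 5)):
        print(f"10x step {i + 1}: loss drops by {g:.4f}")
```

Under these assumptions, every gain is about 89% of the one before it (a factor of 10 to the power of -0.05), so the curve keeps improving but ever more slowly per dollar of compute.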
